Deep learning state of the art 2020 (MIT Deep Learning Series) - Part 1

02 Apr 2020 | deep learning data science

This is one of the talks in the MIT Deep Learning Series by Lex Fridman, covering state-of-the-art developments in deep learning. In this talk, Fridman covers achievements across application fields of deep learning (DL), from NLP to recommender systems. It is a very informative talk that spans diverse facets of DL: not just the technical details but also issues regarding people, education, business, policy, and ethics. I encourage anyone interested in DL to watch the video if time permits. For those who are short on time or want to review the contents, I have summarized the talk in this post and provided hyperlinks to additional materials. Since it is a fairly long talk with a great deal of information, this post covers only the first part of the talk, through the natural language processing (NLP) part.

- YouTube Link to the lecture video

About the speaker

Lex Fridman is an AI researcher at MIT whose primary interests are human-computer interaction, autonomous vehicles, and robotics. He also hosts a podcast featuring leading researchers and practitioners in technology, such as Elon Musk and Andrew Ng.

Below is a summary of his talk.

AI in the context of human history

The dream of AI

“AI began with an ancient wish to forge the gods” - Pamela McCorduck, Machines Who Think (1979)

DL & AI in the context of human history

Dreams, mathematical foundations, and engineering in reality

“It seems probable that once the machine thinking method had started, it would not take long to outstrip our feeble powers. They would be able to converse with each other to sharpen their wits. At some stage therefore, we should have to expect the machines to take control” - Alan Turing, 1951

- Frank Rosenblatt, Perceptron (1957, 1962)

- Kasparov vs. Deep Blue (1997)

- Lee Sedol vs. AlphaGo (2016)

- Robots and autonomous vehicles

History of DL ideas and milestones

- 1943: Neural networks (Pitts and McCulloch)

- 1957-62: Perceptrons (Rosenblatt)

- 1970-86: Backpropagation, RBM, RNN (Linnainmaa)

- 1979-98: CNN, MNIST, LSTM, Bidirectional RNN (Fukushima, Hopfield)

- 2006: “Deep learning”, DBN

- 2009-12: ImageNet (2009) + AlexNet (2012)

- 2014: GANs

- 2016-17: AlphaGo, AlphaZero

- 2017-19: Transformers

Deep learning celebrations, growth, and limitations

Turing award for DL

- Yann LeCun, Geoff Hinton, and Yoshua Bengio win the Turing Award (2018)

“The conceptual and engineering breakthroughs that have made deep neural networks a critical component of computing”

Early figures in DL

- 1943: Walter Pitts & Warren McCulloch (computational model for neural nets)

- 1957, 1962: Frank Rosenblatt (single- and multi-layer perceptrons)

- 1965: Alexey Ivakhnenko & V. G. Lapa (learning algorithm for MLP)

- 1970: Seppo Linnainmaa (backpropagation and automatic differentiation)

- 1979: Kunihiko Fukushima (convolutional neural nets)

- 1982: John Hopfield (Hopfield networks, i.e., recurrent neural nets)

People of DL & AI

- History of science = story of people & ideas

- Deep Learning in Neural Networks: An Overview by Jürgen Schmidhuber

Lex’s hope for the community

- More respect, open-mindedness, collaboration, credit sharing

- Less derision, jealousy, stubbornness, academic silos

Limitations of DL

DL criticism

- In 2019, it became cool to say that DL has limitations

- “By 2020, the popular press starts having stories that the era of Deep Learning is over” (Rodney Brooks)

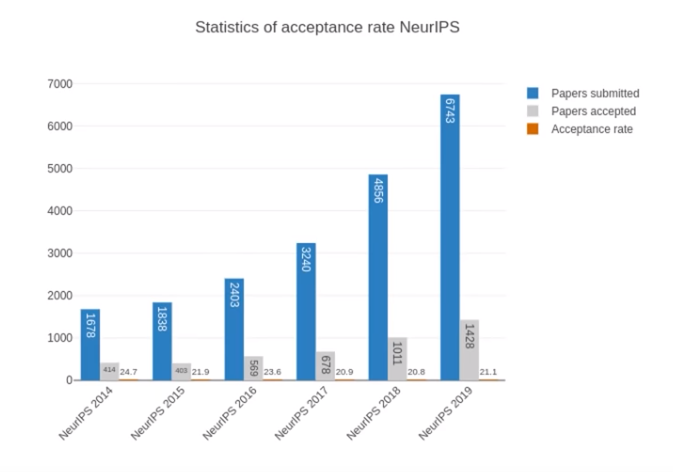

Growth in DL community

Hopes for 2020

Less hype & less anti-hype

Hybrid research

Research topics

- Reasoning

- Active learning & life-long learning

- Multi-modal & multi-task learning

- Open-domain conversation

- Applications: medical, autonomous vehicles

- Algorithmic ethics

- Robotics

DL and deep reinforcement learning frameworks

DL frameworks

TensorFlow (2.0)

- Eager execution by default

- Keras integration

- TensorFlow.js, TensorFlow Lite, TensorFlow Serving, …

PyTorch (1.3)

- TorchScript (graph representation)

- Quantization

- PyTorch Mobile

- TPU support
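To make the "Quantization" item above more concrete, here is a minimal sketch of the general idea: storing floating-point weights as 8-bit integers plus a scale and a zero point. This is a plain-Python illustration of affine quantization, not the PyTorch API; the function names `quantize` and `dequantize` and the toy weights are hypothetical.

```python
def quantize(values, num_bits=8):
    """Affine (asymmetric) quantization of floats to unsigned integers.

    Illustrative only: names and defaults are assumptions, not a library API.
    """
    qmin, qmax = 0, 2 ** num_bits - 1
    # The representable range must include 0.0 so that zero maps exactly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    # Fall back to a scale of 1.0 if all values are zero.
    scale = (hi - lo) / (qmax - qmin) or 1.0
    zero_point = round(qmin - lo / scale)
    quantized = [
        max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Map integers back to approximate floating-point values."""
    return [(q - zero_point) * scale for q in quantized]


# Toy "weights" chosen so the range is 2.55, i.e. a step of about 0.01.
weights = [-1.0, -0.5, 0.0, 0.25, 1.55]
q, scale, zero_point = quantize(weights)
restored = dequantize(q, scale, zero_point)

for w, qi, r in zip(weights, q, restored):
    print(f"{w:+.4f} -> {qi:3d} -> {r:+.4f}")
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most about half a quantization step. Real frameworks add calibration, per-channel scales, and integer kernels on top of this basic mapping.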

RL frameworks

- TensorFlow: OpenAI Baselines (Stable Baselines), TensorForce, Dopamine (Google), TF-Agents, TRFL, RLlib (+Tune), Coach

- PyTorch: Horizon, SLM-Lab

- Misc: RLgraph, Keras-RL

Hopes for 2020

Framework-agnostic research

Make it easier to translate from PyTorch to TensorFlow and vice versa

Mature deep RL frameworks

Converge to fewer, actively-developed, stable RL frameworks less tied to TF or PyTorch

Abstractions

Build higher-level abstractions (e.g., Keras, fastai) to empower people outside the ML community

Natural Language Processing

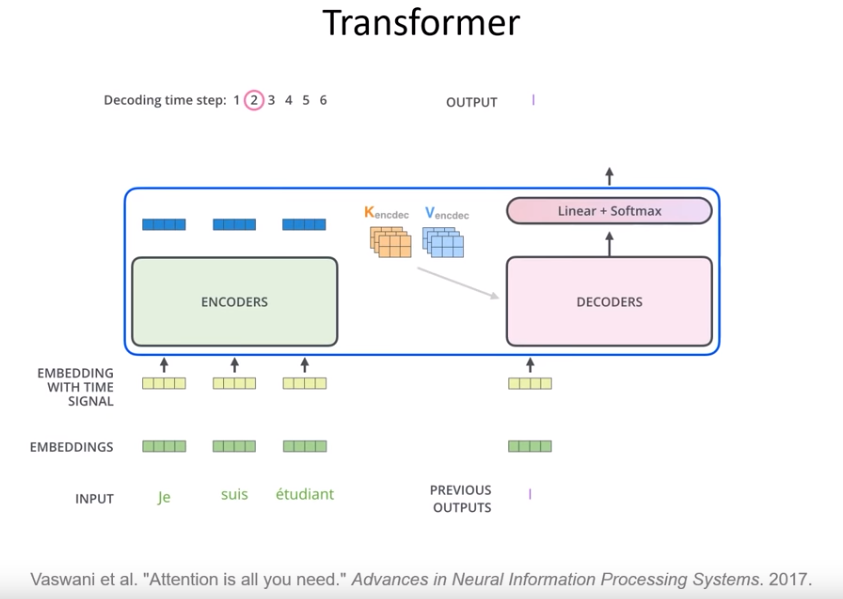

Transformer

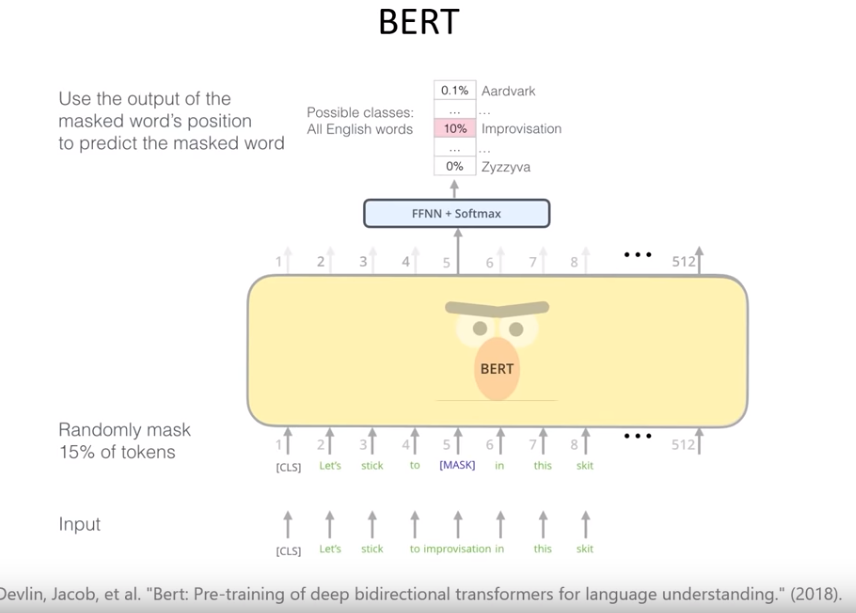
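The core operation of the Transformer is scaled dot-product attention, softmax(QKᵀ / √d_k) V. The following is a minimal plain-Python sketch of that one operation, assuming a toy three-token sequence with two-dimensional embeddings. It leaves out the learned projections, multiple heads, masking, and positional encodings of the full architecture, and the function names are my own.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Returns the output vectors and the attention weights for each query.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Self-attention: queries, keys, and values all come from the same tokens.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and shows how strongly one token attends to every token in the sequence, which is what lets the model relate distant words without recurrence.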

BERT

State-of-the-art performance on various NLP tasks, e.g., sentence classification and question answering

Transformer-based language models (2019)

- BERT, XLNet, RoBERTa, DistilBERT, CTRL, GPT-2, ALBERT, Megatron, …

- Hugging Face: implementation of Transformer models

- Tracking progress in NLP by Sebastian Ruder

- Write with Transformer

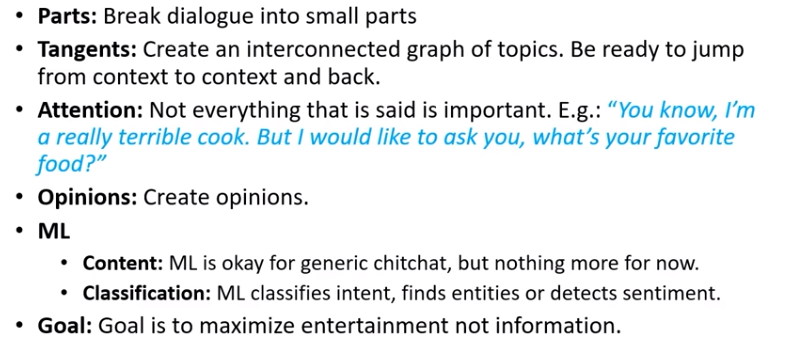

Alexa Prize and open domain conversations

Amazon open-sourced the Topical-Chat dataset, inviting researchers to participate in the Alexa Prize Challenge

Lessons learned

Developments in other NLP tasks

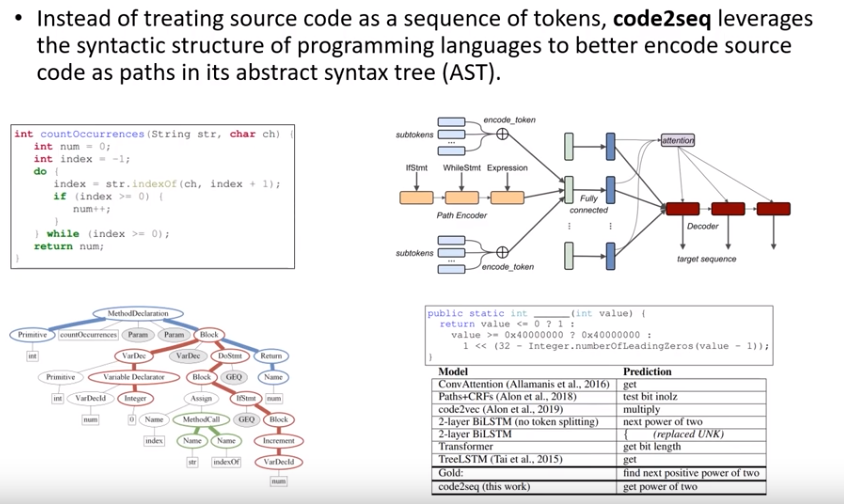

Seq2Seq

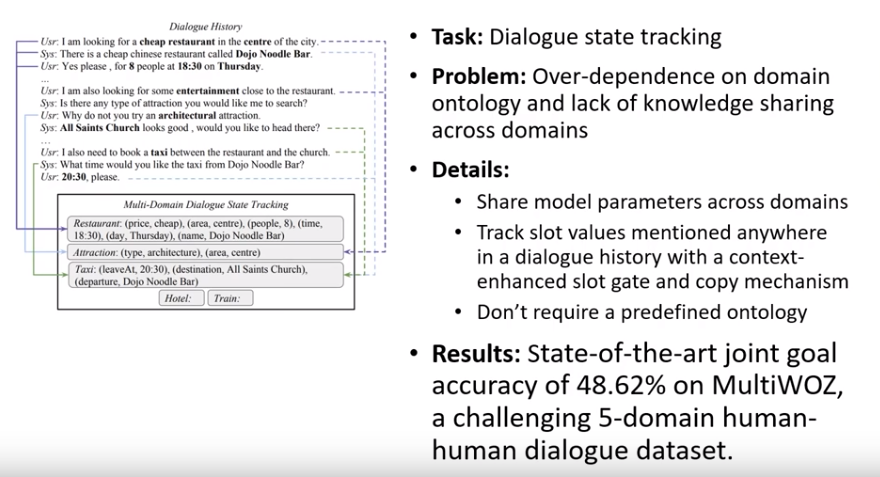

Multi-domain dialogue

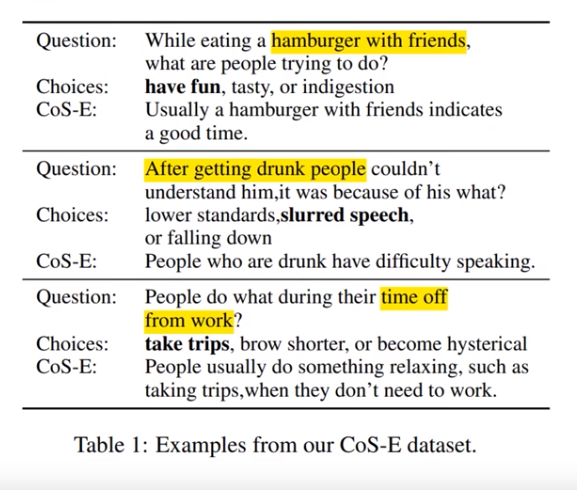

Common-sense reasoning

Hopes for 2020

Reasoning

Combining (commonsense) reasoning with language models

Context

Extending language model context to thousands of words

Dialogue

More focus on open-domain dialogue

Video

Ideas and successes in self-supervised learning in visual data

This concludes the summary of the talk through the NLP part. In the next few posts, I will distill and summarize the remaining parts of the talk.